Electronic Music in Spatial Audio

Thoughts, ideas, graphical scores, ambisonics and projects

WADADA LEO SMITH, ANKHRASMATION: THE LANGUAGE SCORES, 1967-2015

Introduction

This article discusses the conceptual practices and techniques used to create a piece of music in the ambisonics audio format, exploring the future of electronic dance music performance in immersive surround sound. Early last year, I visited the EartH Theatre in Hackney, one of the few venues with L-Acoustics L-iSa Hyperreal Immersive Sound Technology installed, and was inspired by the performances of electronic music artists Suzanne Ciani, Dan Samsa and Tvoya, all of whom used the technology to create an immersive electronic music experience for the audience. Drawing on the concepts and ideologies of spectromorphology, experimental electronic music and musique concrète, the composition I have composed and mixed in ambisonics aims to explore live electronic dance music in an immersive format.

Concept

Electronic dance music holds great significance at the intersection of human interaction and technology and plays a vital role in the history and development of youth culture and experience (Butler, 2006, p. 6). Moreover, when electronic dance music is observed through a subcultural lens, such as that of rave and trance culture, attending an event may evoke a phenomenological experience for those involved (Peters et al., 2012; St John, 2008). The dancefloor becomes an escape from the mundane nine-to-five and the responsibilities of life, bringing together a crowd of people who can feel connected simply by sharing a stimulating experience in synchronicity (St John, 2008). My composition, "Friends, and all", intends to evoke a similar experiential connection by manipulating and spatialising the sound field, adding a further dimension in which listeners can become immersed in a live setting through the choice of rhythm, timbre and textures.

Furthermore, I intend to draw the listener into a 'deep listening' experience, a term coined by Pauline Oliveros in 1989 to describe the process of listening with radical attentiveness (Oliveros, 2005, pp. 1-10). However, the effect of a deep listening experience can depend on the audience's connection to the music and to themselves within the listening experience (ibid). As Oliveros discusses in "Deep Listening: A Composer's Sound Practice" (ibid), when focusing on the tones and structure of music, you allow yourself to enter a heightened state of awareness that affects your emotional and physical responses. “Friends, and all” has two contrasting sections. The first intends to initiate a deep listening state by using what meditation music refers to as 'sonic mantras': repeated notes, textures or voice samples that create a hypnotic, relaxing effect whilst giving the listener a foundation on which to focus (Guided Meditation, 2022).

The second section, by contrast, intends to heighten the listener's senses with an evolving bass line, increased rhythmic activity and percussive voice samples, whilst the earlier synth textures and melody remain the foundation. As Oram (1972) theorizes on the essence of spirituality and music, humans are physical wavebands that can be "coerced into a harmonic relationship" with music and are therefore carried along by the music as it increases or decreases in intensity and tempo.

This article will now look at the concept of spectromorphology in relation to 3rd order ambisonics, the format in which I have chosen to work. First, however, it is necessary to give a brief history of the development of ambisonics and the principles that differentiate it from other immersive audio formats.

Ambisonics

Ambisonics was first developed in the 1970s by a group of British academics supported by the British National Research Development Corporation (NRDC), most notably Michael Gerzon and Peter Fellgett (Oxford University, 2023; Rumsey, 2001). The system was designed to reproduce recordings in 3D spatial sound and was intended as an extension of the Blumlein stereo principle (ibid). It is a unique form of surround sound that takes a hierarchical approach to reproducing directional sound and is not limited to a particular number of audio transmission channels.

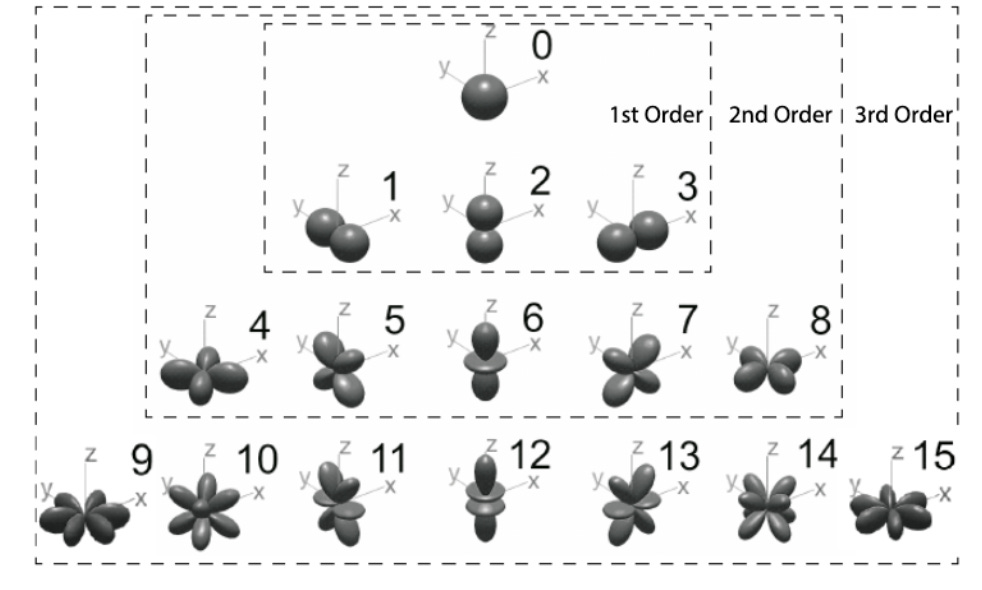

Therefore, it applies to mono, stereo and horizontal surround-sound formats, and extends to a three-dimensional sound field that includes height information (Rumsey, 2001, p. 111). Ambisonic signals can be carried in a number of formats; these include A, B, C and D (ibid). This project has been created and mixed in 3rd order B-format ambisonics, as outlined below.

B-Format

B-format is the leading audio format for Higher Order Ambisonics and the format used for this project. In this format, the individual channels do not correspond directly to speaker feeds but instead contain the components of the ambisonic sound field, which are then decoded for playback on speakers (Blue Ripple Sound, 2022). This allows multi-channel audio to be generated, recorded and transferred to a range of speaker configurations. Ambisonic audio also has an ‘order’ that determines its spatial detail: new channels are added as the order increases (ibid). At zero order, just one mono (omnidirectional) channel is present; at first order, three additional channels are added to make four, each of which behaves like a figure-of-eight microphone (ibid). Third order, the order used for this project, requires sixteen channels.
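As a rough illustration of how the channel count grows with order, and of how a mono source could be placed in a first-order sound field, the relationships above can be sketched in Python. This is a minimal sketch and not a description of what any particular plugin does internally: the function names are hypothetical, the (order + 1) squared channel count is the standard full-sphere figure, and the encoding assumes the traditional B-format convention in which W is attenuated by 1/√2 and azimuth is measured anticlockwise from the front.

```python
import math


def ambisonic_channel_count(order):
    """Channels needed for a full-sphere ambisonic signal of a given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumption: traditional B-format weighting, where the omnidirectional
    W channel is scaled by 1/sqrt(2) and X, Y, Z are figure-of-eight
    components pointing front, left and up respectively.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return w, x, y, z


if __name__ == "__main__":
    for order in range(4):
        print(order, ambisonic_channel_count(order))
    print(encode_first_order(1.0, 90.0, 0.0))
```

Under these assumptions, a source placed directly in front of the listener excites only the W and X channels, while a source at 90 degrees to the left excites W and Y.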

3rd Order, B-Format Ambisonics: Configurations and Channel Reproduction

Brief Outline of A, C, and D Format:

A-Format: the pickup of four cardioid microphone capsules arranged as a tetrahedron, corresponding to left-front (LF), right-front (RF), left-back (LB) and right-back (RB) (ibid).

C-Format: transmission of ambisonic audio signal.

D-Format: decoding and reproduction of ambisonic signals.
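To show how the four A-format capsule signals relate to first-order B-format, the conversion can be sketched as simple sums and differences. This is an illustrative sketch only. It assumes that the LF and RB capsules point upwards and the RF and LB capsules point downwards, which is a common tetrahedral arrangement that the outline above does not specify. The 0.5 scaling is arbitrary, the function name is hypothetical, and the equalisation that real converters apply to compensate for capsule spacing is omitted.

```python
def a_to_b_format(lf, rf, lb, rb):
    """Convert one sample from each A-format capsule into (W, X, Y, Z).

    Assumed capsule orientation (not stated in the article):
      lf = left-front, pointing up     rf = right-front, pointing down
      lb = left-back, pointing down    rb = right-back, pointing up
    """
    w = 0.5 * (lf + rf + lb + rb)        # omnidirectional sum
    x = 0.5 * ((lf - lb) + (rf - rb))    # front minus back
    y = 0.5 * ((lf - rb) - (rf - lb))    # left minus right
    z = 0.5 * ((lf - lb) + (rb - rf))    # up minus down
    return w, x, y, z


if __name__ == "__main__":
    # A signal picked up equally by all four capsules has no direction.
    print(a_to_b_format(1.0, 1.0, 1.0, 1.0))
```

A signal that reaches all four capsules equally lands entirely in W, whereas a signal reaching only the two front capsules also produces a positive X component.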

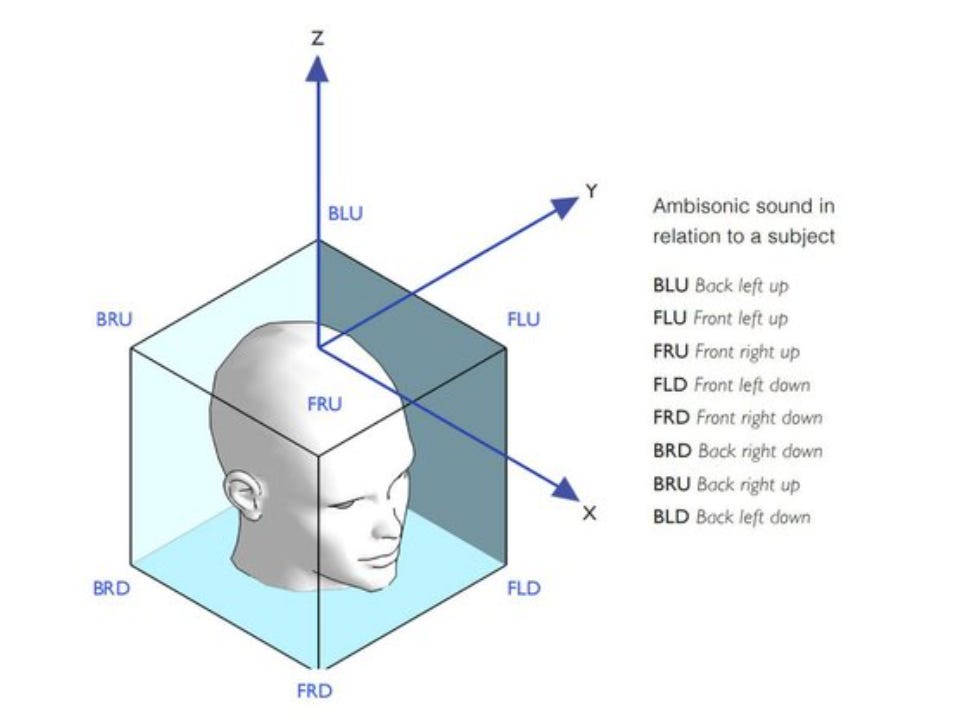

Ambisonic Sound in Relation to the Listener

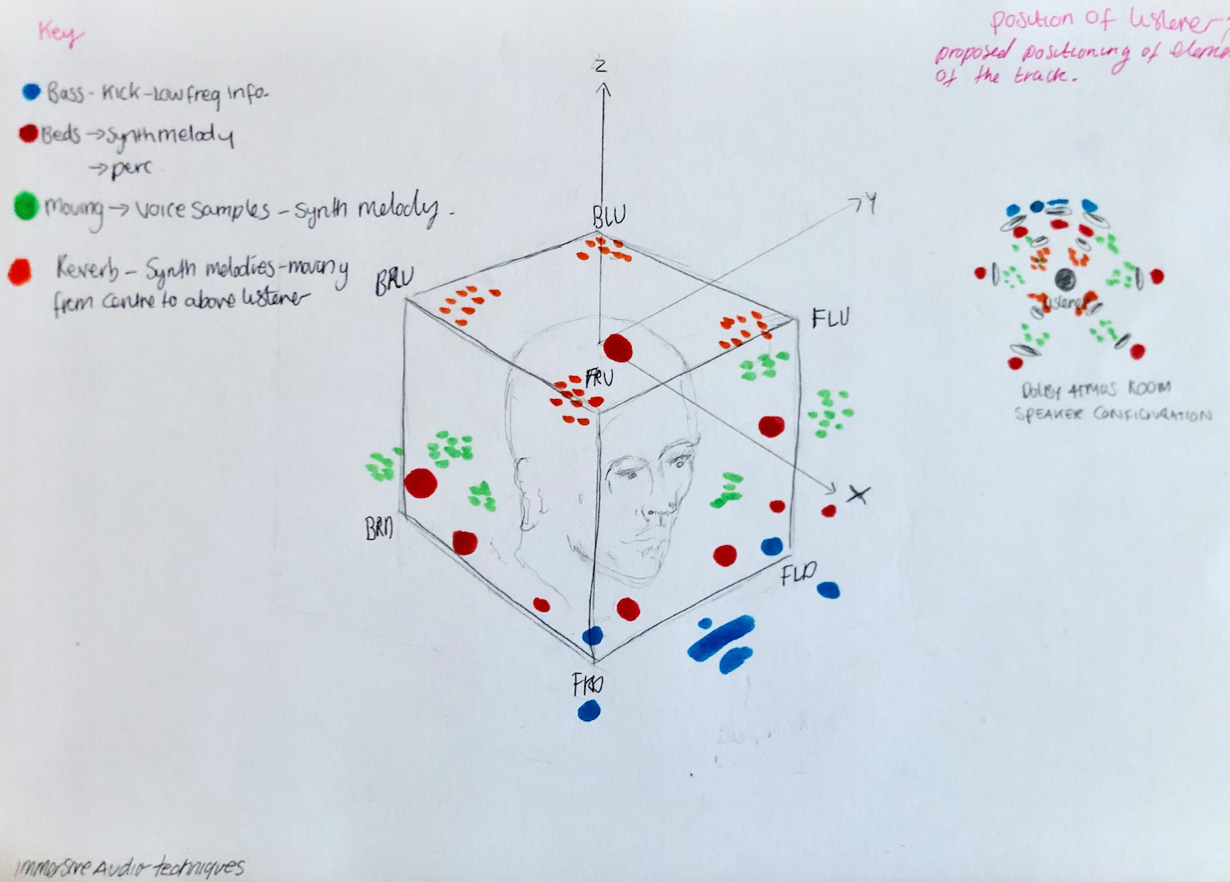

The diagram above has been used as a reference for the placement and spatialisation of the instruments and elements for my immersive project. Below is a planning draft of the spatialisation of the instrumentation and elements of “Friends, and all”, with reference to the above diagram.

Imagined Spatial Positioning in Ambisonics: “Friends, and all”.

This article will now discuss the concept and ideologies of spectromorphology and the phenomenology of the existing body in relation to electronic music, as well as the conceptual practices of experimental music and musique concrète that have informed both the technical and creative production process of the mix.

Spectromorphology

Spectromorphology is a concept used to describe the aural perception of sounds within the spatial sound field (Smalley, 1997; O’Callaghan, 2011). Furthermore, it addresses the link between physical gesture and sound-making in terms of how we perceive a sound's direction, distance and cause (ibid). As described by Peters et al. (2012, pp. 1-5), when we hear the beat of a drum, we also hear the embodiment of human gesture within the instrument's rhythm. For electronic music, discourse surrounds the idea that it can become disembodied, in the sense that physical touch or action is not the cause of the sound (Peters et al., 2012, pp. 1-5). We may, furthermore, disassociate the perception of the sound from the body and abandon the body as a cause (ibid).

This conceptual framework has informed my decisions about placing each instrument, rhythm, texture and voice within the ambisonics mix I have created. It also inspired my decision to showcase the piece using live MIDI mapping to control the movement of some aspects of the ambisonic mix: although I am not the physical cause of the sound, I will embody the perceived direction of some elements of the mix.
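The kind of mapping involved in this live control can be sketched as a linear scaling from a MIDI controller value to an azimuth angle. This is a hypothetical illustration rather than the mapping used in the project: the function name, the choice of a 7-bit control change value (0 to 127) and the azimuth range of -180 to 180 degrees are all assumptions.

```python
def cc_to_azimuth(cc_value, min_deg=-180.0, max_deg=180.0):
    """Scale a 7-bit MIDI control change value (0-127) to an azimuth in degrees.

    Assumption: the controller sweeps the full range linearly, so 0 maps to
    min_deg and 127 maps to max_deg.
    """
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values must lie between 0 and 127")
    return min_deg + (max_deg - min_deg) * cc_value / 127.0


if __name__ == "__main__":
    for value in (0, 32, 64, 96, 127):
        print(value, round(cc_to_azimuth(value), 2))
```

Turning a single knob from its minimum to its maximum would, under these assumptions, carry a sound once around the listener, which is one way a performer's gesture can be tied back to the perceived direction of a sound.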

Experimental Music

A technique I used to inspire and envision my composition initially is the practice of visual scoring. Experimental music composers such as Karlheinz have used this technique in the creation and performance of their music. Working against the syntax of traditionally structured music notation, visual scoring allowed a piece of music to be re-interpreted in a way that depended on the performer (Fabrizi, 2015). I have not, however, used this technique with the intention of having the composition re-interpreted, but instead to use color, shape and form as a way of sketching out the feeling, textures and placement of the structure.

Musique Concrète

The musique concrète movement began after World War II, when the tape machine became an available technology for music artists (Holmes, 1985, p. 77). It transformed electronic music because the tape machine became the instrument and the music existed only as a recording. Previously, by contrast, electronic music had been performed in front of an audience using analogue synthesizers (ibid). Artists could take organic recordings and splice, time-stretch and manipulate them to create atonal compositional works using only the tape machine, a practice noted as one of the earliest forms of audio sampling (ibid).

Although I have not used a tape machine in the production process of this piece, I have drawn on the concept and practice of sampling and recontextualizing organic recordings and samples of voice. Using Ableton's Simpler sampling instrument, I spliced an audio recording of a group of close friends on a night out. This recording has been sampled in conjunction with an audio segment taken from the film "It's a Wonderful Life", directed by Frank Capra (1946). The film follows a businessman overwhelmed by day-to-day stresses, which cause him to contemplate his life and what the world would be like if he had never existed (Pfeiffer, 2023).

With these samples, I intended to reference the phenomenological escapism that experiencing live electronic dance music can evoke, juxtaposed with the idea of remaining present, emotionally and physically, with the music. These samples are among the elements of the track for which I have automated the spatial azimuth, that is, the direction or positioning of a sound in the spherical ambisonic sound field (Rumsey, 2001, pp. 67-72), using the Envelop for Live software for Ableton Live, discussed in the technical specification section of this article.
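An azimuth automation of this kind can be thought of as a list of time and angle breakpoints. The sketch below generates breakpoints for a constant-speed rotation around the listener. It is only an illustration of the idea: the function name and parameters are hypothetical, it does not use or reproduce any Envelop for Live interface, and wrapping the angle into the range -180 to 180 degrees is an assumed convention.

```python
def azimuth_automation(duration_s, revolutions, points_per_second=10):
    """Return (time_s, azimuth_deg) breakpoints for a steady rotation.

    Azimuths are wrapped into [-180, 180) so that the values stay within a
    single assumed panner range however many revolutions are requested.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    steps = int(duration_s * points_per_second)
    breakpoints = []
    for i in range(steps + 1):
        t = i / points_per_second
        angle = 360.0 * revolutions * t / duration_s
        wrapped = (angle + 180.0) % 360.0 - 180.0
        breakpoints.append((t, wrapped))
    return breakpoints


if __name__ == "__main__":
    # One full rotation over four seconds, one breakpoint per second.
    for t, az in azimuth_automation(4.0, 1.0, points_per_second=1):
        print(t, az)
```

In practice such a curve would be drawn or recorded as automation on the panner's azimuth parameter; the list of breakpoints simply makes the shape of that movement explicit.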

Artist Influences and L-iSa

Artists such as Suzanne Ciani, Halina Rice and Max Cooper are key references and inspirations for this project, not only for the sonic aesthetic of their music but also for their technology and immersive live performances (Pangburn and iZotope, 2018). In particular, Halina Rice, a London-based electronic music producer and audio-visual artist, mixes and performs her music live using the L-Acoustics Studio 3D software, an immersive sound application that works in conjunction with the L-Acoustics Immersive Hyperreal speaker configuration, such as the one installed at EartH Theatre in Hackney, London (L-iSa, 2022). I have not used this software to mix and perform my piece, choosing instead the technology accessible to me within the time frame, but I plan to explore it in future immersive projects as a way of performing electronic dance music. For this project, I have decided to use the live MIDI mapping feature within Ableton to showcase my work in a live format.

Technical Specification

The Ableton Project

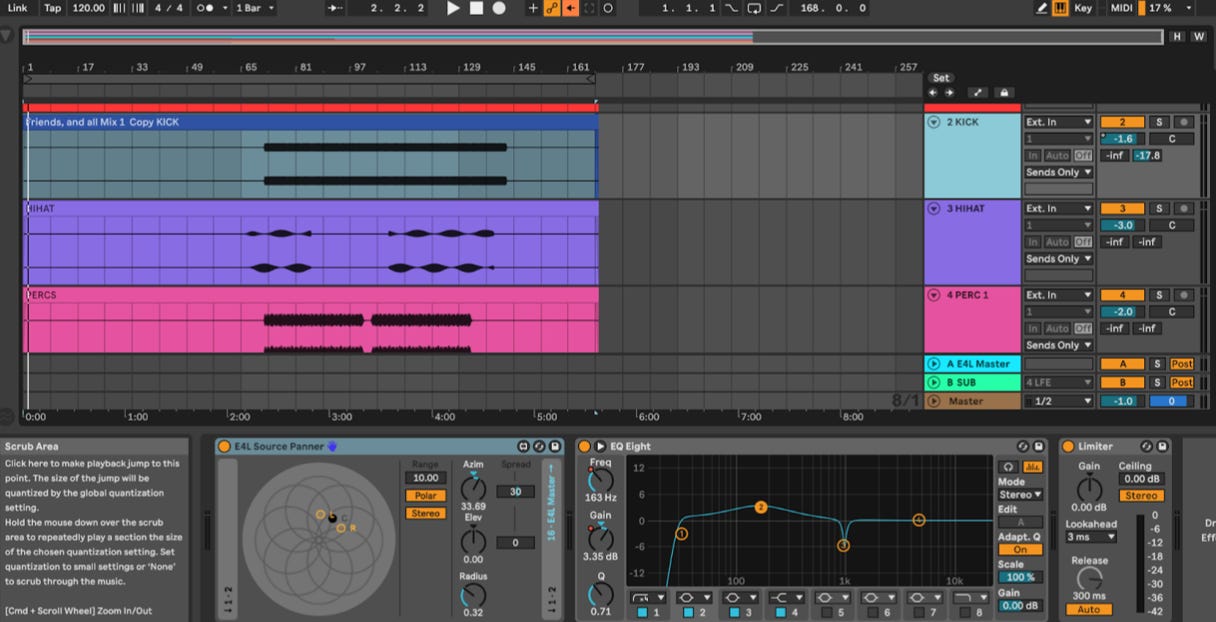

To mix my project in 3rd order ambisonics, I have used the Envelop for Live (E4L) plugins for Ableton Live, a platform designed to allow composers and artists to mix music in an immersive format for spatial audio distribution, performance, virtual reality and sound design (Envelop, 2022). The software includes ambisonic effects as well as source, stereo and mono panners. These plugins allowed me to place elements within the ambisonic sound field and to automate the azimuth and movement parameters within each encoder plugin. A decoder on the E4L master bus then translates the ambisonic mix to the speaker configuration in use (ibid).

Encoding: happens during the mixing process, when an E4L plugin is placed onto a track.

Decoding: happens when audio is bounced, as the E4L master bus decoder translates the audio to the appropriate speaker configuration (Rumsey, 2001).
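The two stages above can be illustrated with a small first-order, horizontal-only example. This is a simplified sketch of the general principle and not the algorithm used by the E4L decoder: the function names are hypothetical, the square speaker layout is an assumed example, the encoding uses the traditional convention with W scaled by 1/√2, and the decoder is a basic projection decoder with no additional weighting.

```python
import math

# Assumed example layout: front-left, back-left, back-right, front-right,
# with azimuth measured anticlockwise from the front in degrees.
SQUARE_LAYOUT_DEG = (45.0, 135.0, -135.0, -45.0)


def encode_horizontal(sample, azimuth_deg):
    """Encode a mono sample into horizontal first-order components (W, X, Y)."""
    az = math.radians(azimuth_deg)
    return sample / math.sqrt(2.0), sample * math.cos(az), sample * math.sin(az)


def decode_horizontal(w, x, y, speaker_azimuths_deg=SQUARE_LAYOUT_DEG):
    """Project (W, X, Y) onto each speaker direction to obtain speaker feeds."""
    count = len(speaker_azimuths_deg)
    feeds = []
    for speaker_deg in speaker_azimuths_deg:
        a = math.radians(speaker_deg)
        directional = x * math.cos(a) + y * math.sin(a)
        feeds.append((math.sqrt(2.0) * w + 2.0 * directional) / count)
    return feeds


if __name__ == "__main__":
    w, x, y = encode_horizontal(1.0, 45.0)
    print([round(feed, 3) for feed in decode_horizontal(w, x, y)])
```

With a source encoded at 45 degrees, the front-left speaker receives the largest feed and the diagonally opposite speaker a small out-of-phase feed, which is characteristic of this basic approach. The same encoded signal could be decoded to a different layout simply by changing the list of speaker angles, which is the practical benefit of keeping encoding and decoding separate.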

Screen capture of the Ableton Project - using the source panner to place the kick within the ambisonics sound-field.

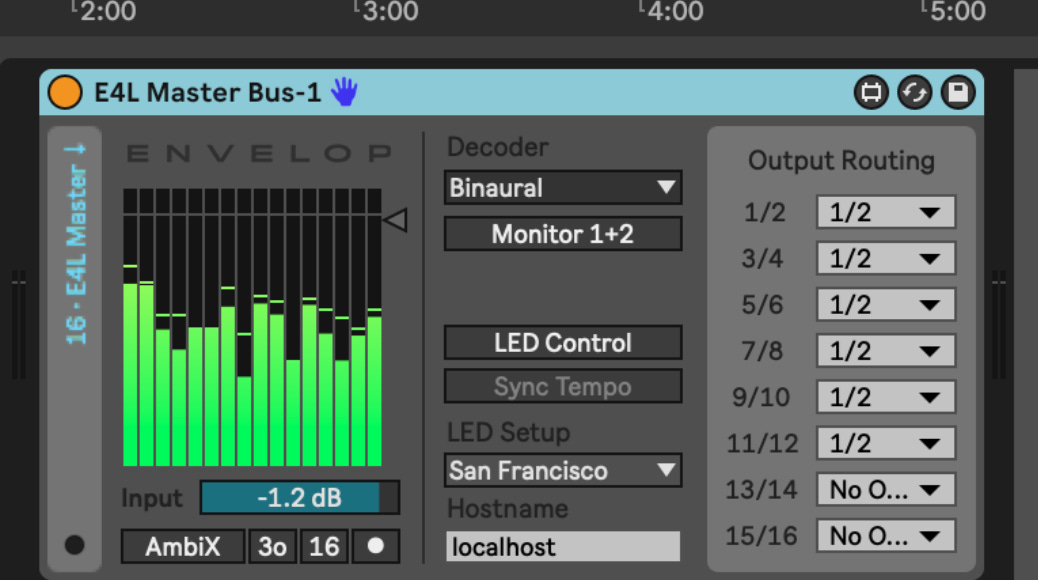

The E4L Master Bus - decoder

For this project, I have decoded and bounced the mix into the following file formats:

Binaural: WAV file for headphone playback.

Ambisonics: AIFF file for playback on immersive speaker configurations; this file contains all the data from the ambisonics decoder.